- DATE:

- AUTHOR: Chris Holton

Face Tracking now available in Designer!

Hey There!

Today, I am delighted to announce the first of what we hope will be many incredible updates to ZapWorks Designer in 2023. This deployment gives our creative community a new tracking type to use within their Augmented Reality experiences: face tracking.

Face tracking is already supported in both ZapWorks Studio and our Universal AR developer SDKs, and with this release we are delighted to offer it in our no-code tool for the first time.

This update allows you to add 2D and 3D elements to AR experiences and attach them to multiple points on a head mask. Users of Designer can make use of face tracking immediately. Here are the key points of the release:


Add a Face tracking scene in seconds

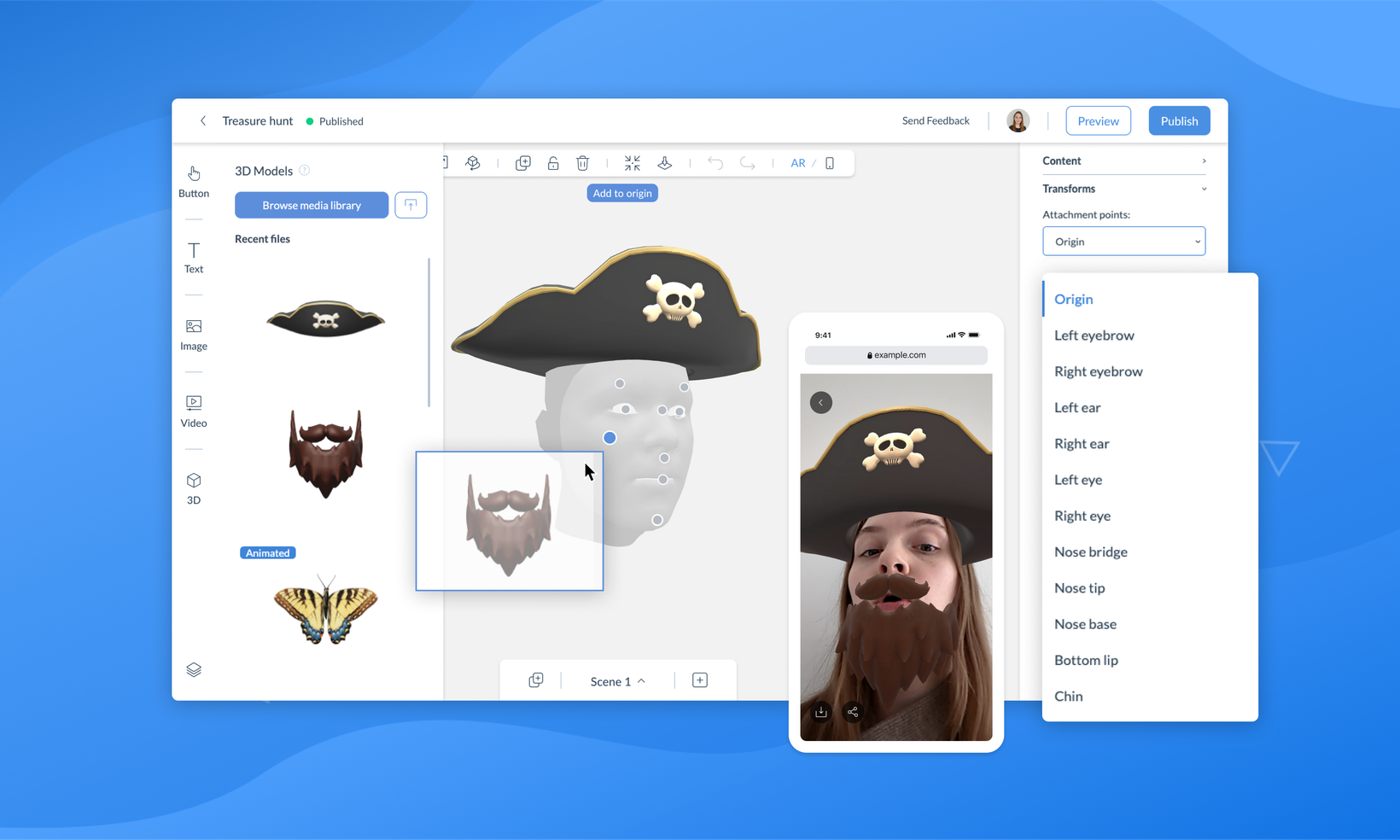

Face tracking is super simple to implement. Either create a new face-tracked experience or add a face-tracked scene to an existing experience. Once created, the face tracking scene reveals a head mask (a model of a head). This acts as your canvas, onto which you can drag and drop items from your media library. Assets from the media library need to be dragged onto attachment points within the head mask.


Creating a face tracking scene and adding content is super straightforward.


Multiple attachment points across the user's face

When adding content to a face tracking scene, 12 attachment points will appear in the editor. These attachment points are anchors for the content: you can add things like glasses to the nose bridge or earrings to the left and right ears. You can also change the position of the model in relation to its attachment point, which is handy if you want to add assets that appear around the head rather than on it.


Using attachment points allows content to stay tracked to exactly the right part of the user's face.


Face tracking, world tracking, image tracking, oh my!

Combine tracking types in Designer to create rich, multi-faceted experiences that drive more engagement with your activation. By blending tracking types, you are free to tell longer stories and take users on more rewarding journeys.


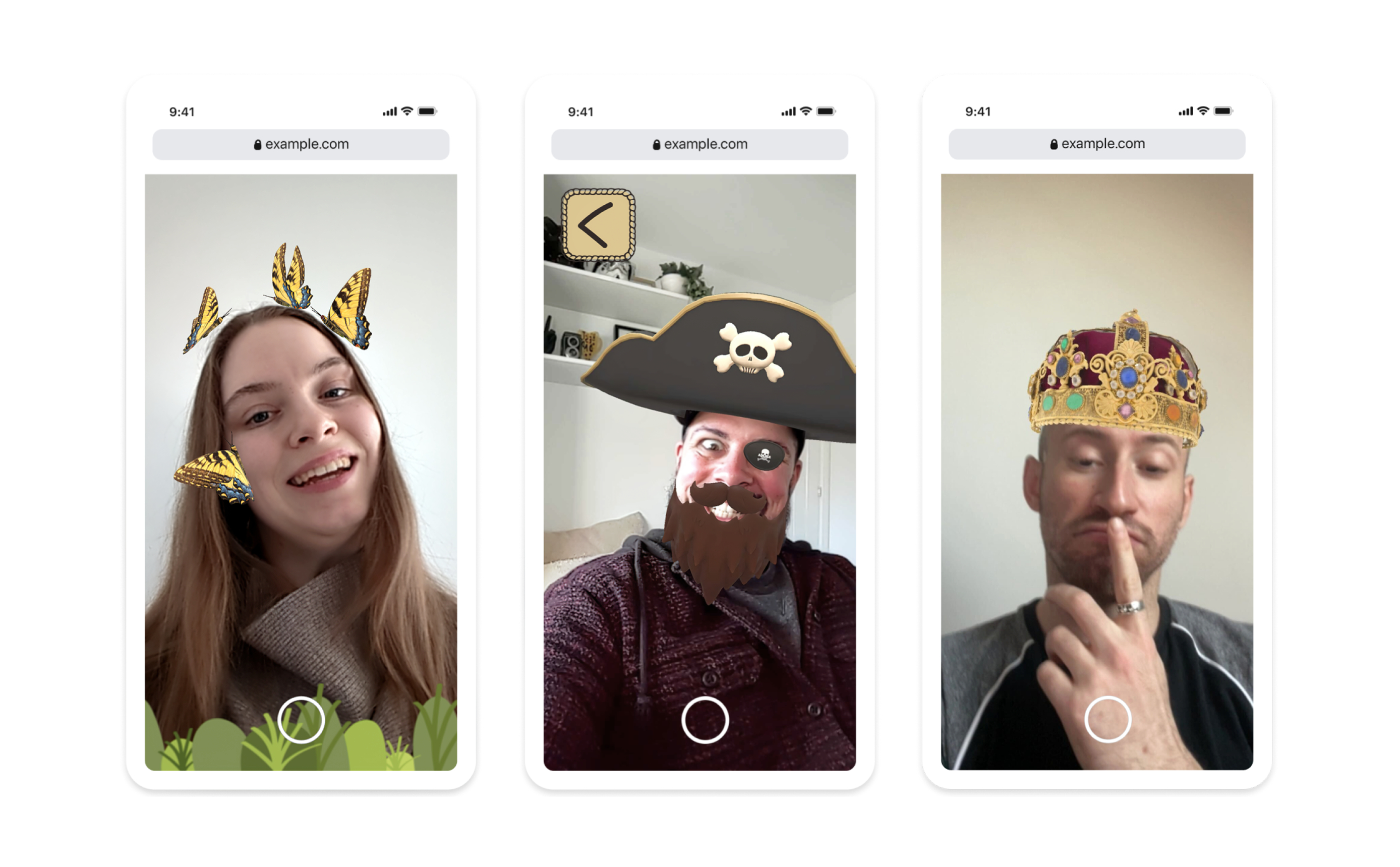

Combine Face Tracked Scenes with Screen UI and photo snapshots

Screen UI functionality adds further interactivity to your experiences, such as a photo capture button and customisable navigation options. Encourage your audience to capture the moment and share it with their friends. To add branding, you can include objects such as image frames and logos, or assets such as visors, stickers or hats. Users can then capture and share their selfies, making more people aware of your experience and, most importantly, your brand.

Combining screen UI and face tracking allows your end users to take selfies to send to their friends.


Face tracking works with AR Web Embed

Allow consumers to try before they buy with a virtual try-on. Boost conversion rates for accessories such as hats, headphones and sunglasses by launching the try-on from a 3D AR Web Embed on your e-commerce store.


Thank you as always for your feedback and support. We hope that you love this awesome new feature as much as we do. As always, you can check out helpful articles for getting started on our documentation site. In addition, Zappar's wonderful Product Designer, Lucy, has put together an awesome follow-along tutorial showing you how to build a full project combining face tracking with the other tracking types.


A big thank you to the team for all their hard work on this latest update. It's been such a fun project to work on, and I look forward to seeing even more of your incredible projects in the coming weeks and months.